|

MODFLOW 6

version 6.8.0.dev0

USGS Modular Hydrologic Model

|

Data Types | |

| type | parallelsolutiontype |

Functions/Subroutines | |

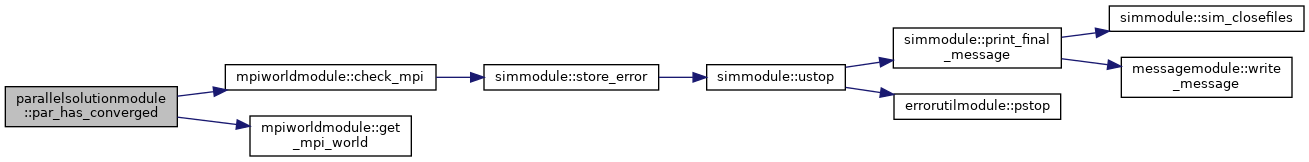

| logical(lgp) function | par_has_converged (this, max_dvc) |

| Check global convergence. The local maximum dependent-variable change is reduced over MPI with the values from all other processes. | |

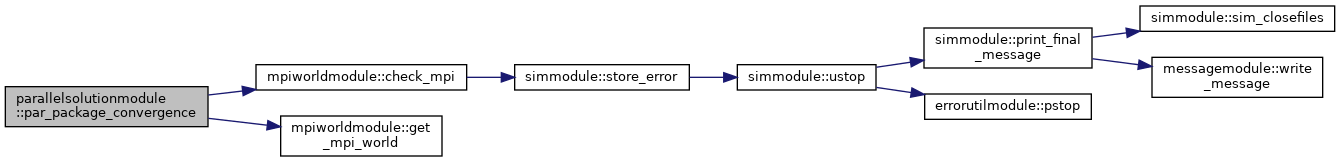

| integer(i4b) function | par_package_convergence (this, dpak, cpakout, iend) |

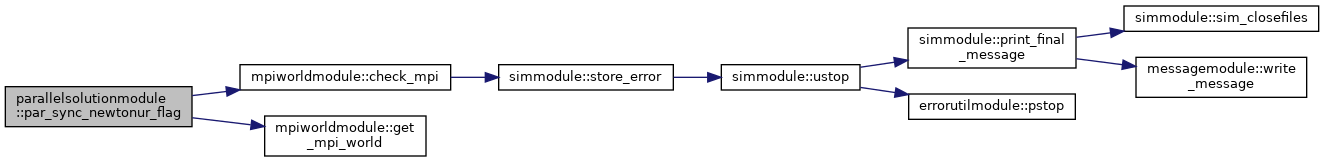

| integer(i4b) function | par_sync_newtonur_flag (this, inewtonur) |

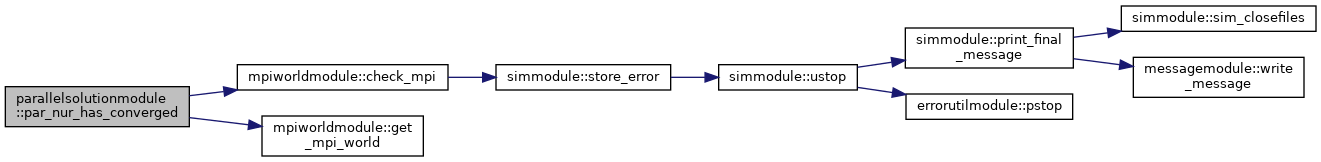

| logical(lgp) function | par_nur_has_converged (this, dxold_max, hncg) |

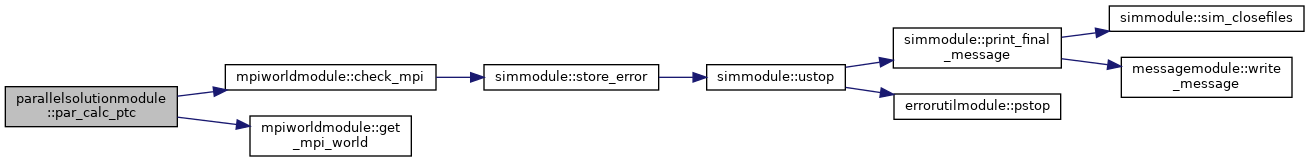

| subroutine | par_calc_ptc (this, iptc, ptcf) |

| Calculate the pseudo-transient continuation (PTC) factor. | |

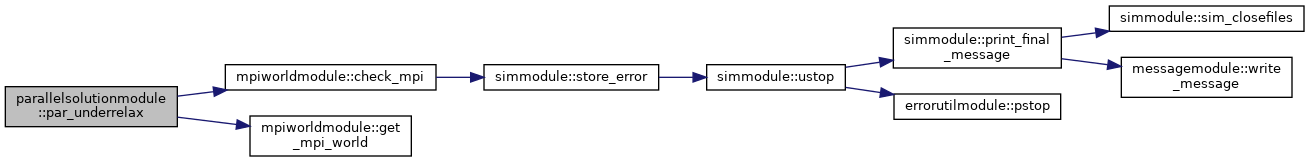

| subroutine | par_underrelax (this, kiter, bigch, neq, active, x, xtemp) |

| Apply under-relaxation in sync over all processes. | |

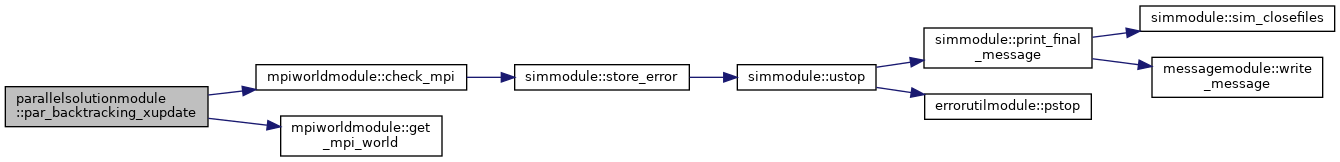

| subroutine | par_backtracking_xupdate (this, bt_flag) |

| Synchronize the backtracking flag over all processes. | |

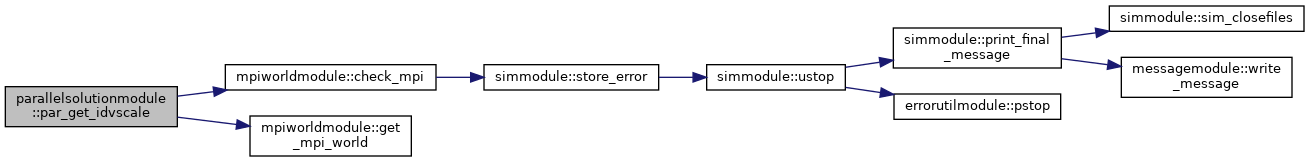

| integer(i4b) function | par_get_idvscale (this) |

| Synchronize the idvscale flag over all processes. | |

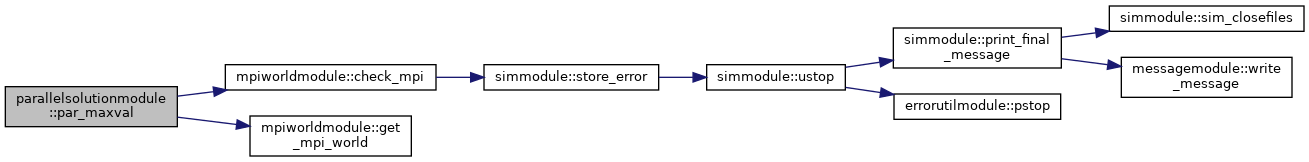

| subroutine | par_maxval (this, nsize, v, vmax) |

| Synchronize maxval over all processes. | |

| subroutine | par_backtracking_xupdate (this, bt_flag) | private |

| [in,out] | this | ParallelSolutionType instance |

| [in,out] | bt_flag | global backtracking flag: 1 if backtracking was performed, 0 if it was not |

Definition at line 220 of file ParallelSolution.f90.
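The idea of a global backtracking flag can be illustrated without MPI. This is a minimal sketch, not the MODFLOW 6 implementation: it assumes the global flag is 1 whenever at least one process performed backtracking (a maximum over the 0/1 local flags). The function name `sync_backtracking_flag` and the list standing in for per-process flags are hypothetical.

```python
def sync_backtracking_flag(local_flags):
    """Combine per-process 0/1 backtracking flags into one global flag."""
    # Assumed rule: the global flag is set if any simulated rank backtracked,
    # which for 0/1 flags is the same as taking the maximum.
    return max(local_flags)


print(sync_backtracking_flag([0, 1, 0]))  # 1
print(sync_backtracking_flag([0, 0, 0]))  # 0
```

Under this assumption all processes would agree on whether the solution update was backtracked, even if only one subdomain triggered it.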

| subroutine | par_calc_ptc (this, iptc, ptcf) | private |

| this | parallel solution |

| iptc | flag indicating whether PTC is applied (1) or not (0) |

| ptcf | the (global) PTC factor calculated |

Definition at line 147 of file ParallelSolution.f90.

| integer(i4b) function | par_get_idvscale (this) | private |

| this | ParallelSolutionType instance |

Definition at line 252 of file ParallelSolution.f90.

| logical(lgp) function | par_has_converged (this, max_dvc) | private |

| this | parallel solution |

| max_dvc | the LOCAL maximum dependent variable change |

Definition at line 40 of file ParallelSolution.f90.
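The reduction of the local maximum dependent-variable change can be illustrated without MPI. This is a minimal sketch, not the MODFLOW 6 implementation: it assumes the reduction is a maximum over absolute values and that convergence means the global maximum is at or below a closure tolerance. The function name `global_has_converged`, the tolerance name `dvclose`, and the list standing in for per-process values are hypothetical.

```python
def global_has_converged(local_max_dvcs, dvclose):
    """Return True when the largest absolute change over all ranks is within dvclose.

    local_max_dvcs stands in for the per-process max_dvc values; in an MPI run
    each rank would hold one value and a max-reduction would combine them.
    """
    # Assumed reduction: maximum of absolute values over all simulated ranks.
    global_max_dvc = max(abs(v) for v in local_max_dvcs)
    return global_max_dvc <= dvclose


# Three simulated ranks; in the first call the second rank exceeds the tolerance.
print(global_has_converged([1.0e-5, -3.0e-3, 2.0e-6], dvclose=1.0e-4))  # False
print(global_has_converged([1.0e-5, -3.0e-5, 2.0e-6], dvclose=1.0e-4))  # True
```

When the reduced value is shared with every process, all ranks reach the same convergence decision and leave the iteration loop together.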

| subroutine | par_maxval (this, nsize, v, vmax) | private |

| | this | ParallelSolutionType instance |

| [in] | nsize | length of vector |

| [in] | v | input vector |

| [in,out] | vmax | maximum value |

Definition at line 275 of file ParallelSolution.f90.
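A two-step maximum (local, then across processes) can be sketched as follows. This is an illustration under stated assumptions, not the MODFLOW 6 code: it assumes a plain maximum in both steps, whereas the actual routine may compare magnitudes instead. The function name `global_maxval` and the nested list standing in for the per-process vectors `v` are hypothetical.

```python
def global_maxval(vectors_per_rank):
    """Return the maximum over all entries of all simulated ranks' vectors."""
    # Step 1: each simulated rank reduces its own vector to a local maximum.
    local_maxima = [max(v) for v in vectors_per_rank]
    # Step 2: a max-reduction over ranks gives the value every rank would receive.
    return max(local_maxima)


vmax = global_maxval([[0.1, 0.7, 0.3], [0.2, 0.9], [0.05]])
print(vmax)  # 0.9
```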

| logical(lgp) function | par_nur_has_converged (this, dxold_max, hncg) | private |

| | this | parallel solution instance |

| [in] | dxold_max | the maximum dependent variable change for cells not adjusted by NUR |

| [in] | hncg | largest dependent-variable change at the end of the Picard iteration |

Definition at line 113 of file ParallelSolution.f90.

| integer(i4b) function | par_package_convergence (this, dpak, cpakout, iend) | private |

| | this | parallel solution instance |

| [in] | dpak | Newton Under-relaxation flag |

Definition at line 67 of file ParallelSolution.f90.

| integer(i4b) function | par_sync_newtonur_flag (this, inewtonur) | private |

| | this | parallel solution instance |

| [in] | inewtonur | local Newton Under-relaxation flag |

Definition at line 94 of file ParallelSolution.f90.

| subroutine | par_underrelax (this, kiter, bigch, neq, active, x, xtemp) | private |

| | this | parallel solution instance |

| [in] | kiter | Picard iteration number |

| [in] | bigch | maximum dependent-variable change |

| [in] | neq | number of equations |

| [in] | active | active cell flag (1) |

| [in,out] | x | current dependent-variable |

| [in] | xtemp | previous dependent-variable |

Definition at line 181 of file ParallelSolution.f90.