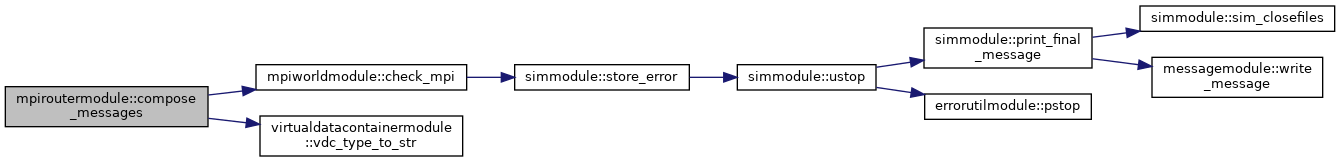

Constructs the message bodies' MPI datatypes for a unit (a solution) and a given stage. This is done in a sequence of 6 steps (distributed over 3 phases):

step 1: Receive headers from remote addresses requesting data from virtual data containers (models, exchanges, ...) local to this process step 2: Send headers to remote addresses to indicate for which virtual data containers (models, exchanges, ...) data is requested

step 3: Based on the received headers, receive element maps (which elements are to be sent from a contiguous array) for outgoing data step 4: Send element maps to remote addresses to specify incoming data

step 5: Construct the message receive body based on the virtual data items in the virtual data containers, and cache

step 6: Construct the message send body, based on received header data and and maps, from the virtual data containers, and cache

380 class(MpiRouterType) :: this

382 integer(I4B) :: stage

383 integer,

dimension(:) :: body_snd_t

384 integer,

dimension(:) :: body_rcv_t

386 integer(I4B) :: i, j, k

390 integer,

dimension(:),

allocatable :: rcv_req

391 integer,

dimension(:),

allocatable :: snd_req

392 integer,

dimension(:, :),

allocatable :: rcv_stat

394 integer(I4B) :: max_headers

395 type(VdcHeaderType),

dimension(:, :),

allocatable :: headers

396 integer,

dimension(:),

allocatable :: hdr_rcv_t

397 integer,

dimension(:),

allocatable :: hdr_snd_t

398 integer,

dimension(:),

allocatable :: hdr_rcv_cnt

400 type(VdcReceiverMapsType),

dimension(:, :),

allocatable :: rcv_maps

401 integer,

dimension(:),

allocatable :: map_rcv_t

402 integer,

dimension(:),

allocatable :: map_snd_t

405 allocate (rcv_req(this%receivers%size))

406 allocate (snd_req(this%senders%size))

407 allocate (rcv_stat(mpi_status_size, this%receivers%size))

410 rcv_req = mpi_request_null

411 snd_req = mpi_request_null

414 max_headers =

size(this%rte_models) +

size(this%rte_exchanges)

415 allocate (hdr_rcv_t(this%receivers%size))

416 allocate (hdr_snd_t(this%senders%size))

417 allocate (headers(max_headers, this%receivers%size))

418 allocate (hdr_rcv_cnt(this%receivers%size))

421 allocate (map_snd_t(this%senders%size))

422 allocate (map_rcv_t(this%receivers%size))

423 allocate (rcv_maps(max_headers, this%receivers%size))

425 if (this%enable_monitor)

then

426 write (this%imon,

'(2x,a)')

"== communicating headers =="

430 do i = 1, this%receivers%size

431 rnk = this%receivers%at(i)

432 if (this%enable_monitor)

then

433 write (this%imon,

'(4x,a,i0)')

"Ireceive header from process: ", rnk

435 call this%message_builder%create_header_rcv(hdr_rcv_t(i))

436 call mpi_irecv(headers(:, i), max_headers, hdr_rcv_t(i), rnk, stage, &

437 this%mpi_world%comm, rcv_req(i), ierr)

442 do i = 1, this%senders%size

443 rnk = this%senders%at(i)

444 if (this%enable_monitor)

then

445 write (this%imon,

'(4x,a,i0)')

"send header to process: ", rnk

447 call this%message_builder%create_header_snd(rnk, stage, hdr_snd_t(i))

448 call mpi_isend(mpi_bottom, 1, hdr_snd_t(i), rnk, stage, &

449 this%mpi_world%comm, snd_req(i), ierr)

454 call g_prof%start(

"MPI_WaitAll ("//trim(stg_to_str(stage))//

")", &

455 this%tmr_mpi_wait(stage, unit + 1))

456 call mpi_waitall(this%receivers%size, rcv_req, rcv_stat, ierr)

457 call g_prof%stop(this%tmr_mpi_wait(stage, unit + 1))

461 rcv_req = mpi_request_null

462 snd_req = mpi_request_null

465 do i = 1, this%receivers%size

466 call mpi_get_count(rcv_stat(:, i), hdr_rcv_t(i), hdr_rcv_cnt(i), ierr)

468 if (this%enable_monitor)

then

469 rnk = this%senders%at(i)

470 write (this%imon,

'(4x,a,i0)')

"received headers from process: ", rnk

471 write (this%imon,

'(6x,a)')

"expecting data for:"

472 do j = 1, hdr_rcv_cnt(i)

473 write (this%imon,

'(6x,a,i0,a,a)')

"id: ", headers(j, i)%id, &

474 " type: ", trim(vdc_type_to_str(headers(j, i)%container_type))

475 write (this%imon,

'(6x,a,99i6)')

"map sizes: ", headers(j, i)%map_sizes

481 do i = 1, this%receivers%size

482 call mpi_type_free(hdr_rcv_t(i), ierr)

484 do i = 1, this%senders%size

485 call mpi_type_free(hdr_snd_t(i), ierr)

488 if (this%enable_monitor)

then

489 write (this%imon,

'(2x,a)')

"== communicating maps =="

493 do i = 1, this%receivers%size

494 do j = 1, hdr_rcv_cnt(i)

495 call rcv_maps(j, i)%create(headers(j, i)%map_sizes)

500 do i = 1, this%receivers%size

501 rnk = this%receivers%at(i)

502 if (this%enable_monitor)

then

503 write (this%imon,

'(4x,a,i0)')

"Ireceive maps from process: ", rnk

506 call this%message_builder%create_map_rcv(rcv_maps(:, i), hdr_rcv_cnt(i), &

508 call mpi_irecv(mpi_bottom, 1, map_rcv_t(i), rnk, stage, &

509 this%mpi_world%comm, rcv_req(i), ierr)

514 do i = 1, this%senders%size

515 rnk = this%senders%at(i)

516 if (this%enable_monitor)

then

517 write (this%imon,

'(4x,a,i0)')

"send map to process: ", rnk

520 call this%message_builder%create_map_snd(rnk, stage, map_snd_t(i))

521 call mpi_isend(mpi_bottom, 1, map_snd_t(i), rnk, stage, &

522 this%mpi_world%comm, snd_req(i), ierr)

527 call g_prof%start(

"MPI_WaitAll ("//trim(stg_to_str(stage))//

")", &

528 this%tmr_mpi_wait(stage, unit + 1))

529 call mpi_waitall(this%receivers%size, rcv_req, rcv_stat, ierr)

530 call g_prof%stop(this%tmr_mpi_wait(stage, unit + 1))

534 if (this%enable_monitor)

then

535 do i = 1, this%receivers%size

536 rnk = this%receivers%at(i)

537 write (this%imon,

'(4x,a,i0)')

"received maps from process: ", rnk

538 do j = 1, hdr_rcv_cnt(i)

539 write (this%imon,

'(6x,a,i0,a,a)')

"id: ", headers(j, i)%id, &

540 " type: ", trim(vdc_type_to_str(headers(j, i)%container_type))

541 do k = 1, nr_vdc_element_maps

542 write (this%imon,

'(8x,i0, a,i0)') k,

" nr. elements: ", &

543 rcv_maps(j, i)%el_maps(k)%nr_virt_elems

544 if (rcv_maps(j, i)%el_maps(k)%nr_virt_elems > 0)

then

545 write (this%imon,

'(8x,*(i6))') &

546 rcv_maps(j, i)%el_maps(k)%remote_elem_shift

554 do i = 1, this%receivers%size

555 call mpi_type_free(map_rcv_t(i), ierr)

557 do i = 1, this%senders%size

558 call mpi_type_free(map_snd_t(i), ierr)

561 if (this%enable_monitor)

then

562 write (this%imon,

'(2x,a)')

"== composing message bodies =="

566 do i = 1, this%senders%size

567 rnk = this%senders%at(i)

568 if (this%enable_monitor)

then

569 write (this%imon,

'(4x,a,i0)')

"build recv body for process: ", rnk

572 call this%message_builder%create_body_rcv(rnk, stage, body_rcv_t(i))

573 call this%msg_cache%put(unit, rnk, stage, mpi_bdy_rcv, body_rcv_t(i))

577 do i = 1, this%receivers%size

578 rnk = this%receivers%at(i)

579 if (this%enable_monitor)

then

580 write (this%imon,

'(4x,a,i0)')

"build send body for process: ", rnk

583 call this%message_builder%create_body_snd( &

584 rnk, stage, headers(1:hdr_rcv_cnt(i), i), &

585 rcv_maps(:, i), body_snd_t(i))

586 call this%msg_cache%put(unit, rnk, stage, mpi_bdy_snd, body_snd_t(i))

590 do i = 1, this%receivers%size

591 do j = 1, hdr_rcv_cnt(i)

592 call rcv_maps(j, i)%destroy()

596 deallocate (rcv_req, snd_req, rcv_stat)

597 deallocate (hdr_rcv_t, hdr_snd_t, hdr_rcv_cnt)

599 deallocate (map_rcv_t, map_snd_t)

600 deallocate (rcv_maps)